Frequently Asked Questions

Frequently asked questions about building Tools

How do I set default values for input parameters?

Click on the settings icon at the bottom right of the input component. Set the values, then click on “Set current value”.

How do I insert variables into the Code step?

Variables are accessible via the params parameter. For instance, to access a variable called name, use params.name in JavaScript or params["name"] in Python.
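As a minimal sketch of the Python form, the snippet below stands in for the body of a Code step. The params dictionary is defined by hand here only so the example runs on its own; inside a Code step it is assumed to be supplied by the platform. The variable name and its value are illustrative.

```python
# Stand-in for the `params` object. Inside a Code step it is assumed to be
# provided for you, so this line exists only to make the sketch runnable.
params = {"name": "Ada"}

# Read the input variable called `name`.
name = params["name"]

greeting = f"Hello, {name}!"
print(greeting)
```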

How do I insert variables into the API step?

On your API component, change the body to edit as string and use {{}} to access variables.

How do I make a step run conditionally?

Click on the three vertical dots on the top right of the step and select “Add conditions”. See the full guide at Adding condition to a step.

Why do I have to use single or double quotations around variables in my prompts?

Such marks (e.g. single, double, or triple quote marks) are not required and have no direct functional purpose.

Using them is a prompting technique that has been found to work well with LLMs.

Keep in mind that an LLM prompt is a long piece of text composed of instructions, examples, etc.

Single, double, or triple quote marks around a variable X simply mark the scope of X (i.e. the beginning and end of the string X within the prompt).

As a side note, the whole {{X}} is replaced by the value of the variable X, so there are no {{}} around it when the prompt is passed to the LLM.

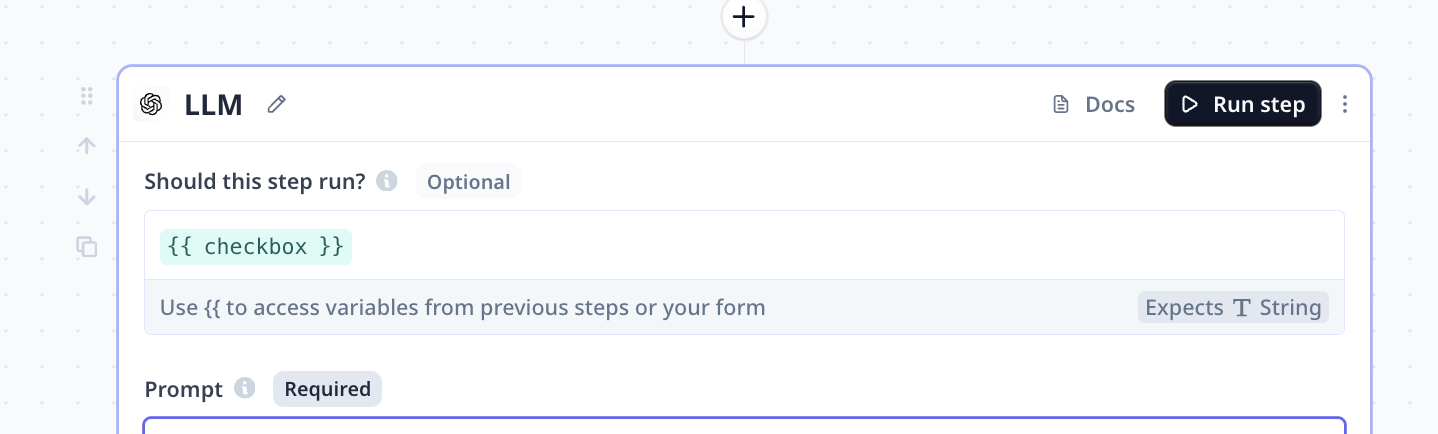
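To illustrate the substitution and the role of the quote marks, here is a rough sketch in Python. The render function is a hypothetical stand-in written for this example, not the platform's actual templating code, and the variable name article is made up.

```python
import re

def render(template, variables):
    """Replace each {{name}} placeholder with the matching variable value."""
    def substitute(match):
        return str(variables[match.group(1)])
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)

# Triple quotes mark where the variable's text begins and ends in the prompt.
prompt_template = 'Summarize the following text:\n"""{{article}}"""'
prompt = render(prompt_template, {"article": "LLMs predict the next token."})

# The rendered prompt contains the variable's value and no {{}}.
print(prompt)
```

The quote marks survive into the final prompt, while the curly braces do not.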

How do I use the Checkbox input component as a condition for running a step?

Add a condition to your step and use {{checkbox variable name}} as the value for the condition. For example, under the default step name:

- {{checkbox}} when checkbox is ticked

- {{!checkbox}} when checkbox is not ticked

How do I run a step multiple times, like a loop?

Click on the three vertical dots on the top right of the step and select “Enable foreach”. See the full guide at Loop through a step.

How do I reduce LLM hallucination?

Here is a list of steps to take to improve your experience with LLMs:

- Provide a good and precise system prompt

- Provide a good prompt (see Tips on a good prompt)

- Provide data as background knowledge

- Experiment more with LLMs

Why is the LLM output cut off in the middle of a sentence, and how do I fix it?

LLMs can handle only a limited number of tokens. At Relevance, we use ~90% for the prompt (including knowledge) and the rest for the output. This means that if your prompt and knowledge contain a very large number of tokens, there will not be enough room for the full output.

In almost all such cases, the fix is to fetch only the most relevant pieces of knowledge (see How to handle large text). Most relevant is a vector search applied to your knowledge that selects only the best matching entries to be fed to the LLM as context. By default, the top 100 matching entries are fetched. To leave more room for the output, decrease the page size parameter under the advanced options in most relevant.
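The token split can be made concrete with a little arithmetic. The sketch below assumes a hypothetical context window of 16,000 tokens and treats the ~90% figure as exactly 90%; actual limits depend on the model in use.

```python
def output_token_budget(context_window, prompt_percent=90):
    """Tokens left for the output once the prompt share is reserved."""
    prompt_budget = context_window * prompt_percent // 100
    return context_window - prompt_budget

# Hypothetical model with a 16,000-token context window.
context_window = 16_000
output_budget = output_token_budget(context_window)
print(f"Prompt and knowledge: up to {context_window - output_budget} tokens")
print(f"Output: about {output_budget} tokens")
```

Under these assumptions the output has roughly 1,600 tokens available, which is why trimming the knowledge passed into the prompt is the usual remedy.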

How do I set multiple outputs?

In the last step of your Tool, click on the Configure output button. Disable “Infer output from the last step”.

- Using Add new output key, you can add outputs

- Using {{}}, you can access variables and steps’ outputs

More details are provided at Output configuration

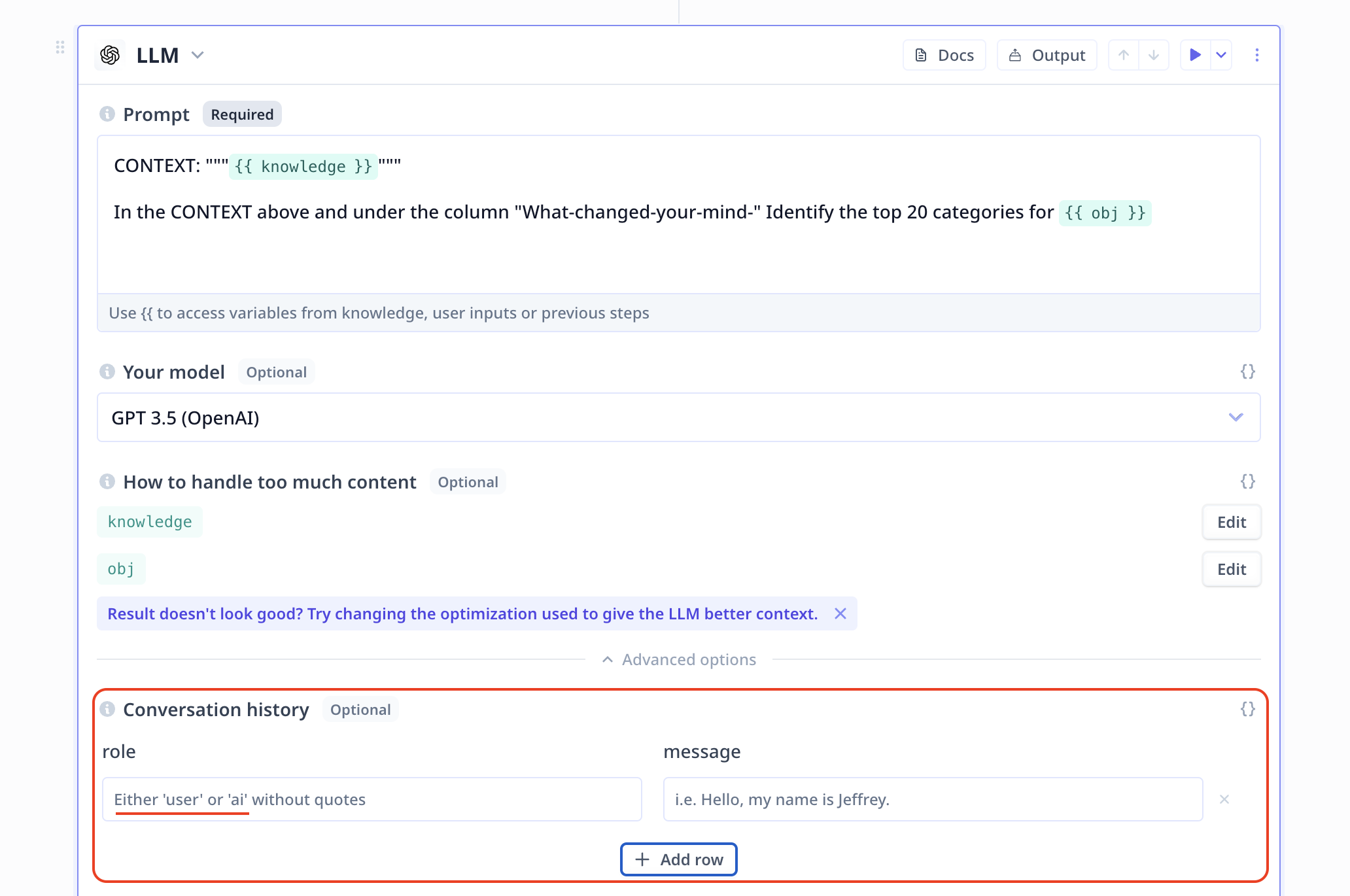

Error: Wrong chat history setup

The errors below can occur when “Conversation history” is not set correctly.

Studio transformation prompt_completion input validation error: must be array {"type":"array"} /history

Provide values for the conversation history, or use the x to remove it.

Studio transformation prompt_completion input validation error: must be equal to one of the allowed values {"allowedValues":["user","ai","function"]} /history/0/role

Note that the only allowed roles are ai and user.
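The two checks behind these errors can be sketched as a small Python function. This is only an illustration, not the platform's validator: the check_history helper is hypothetical, and the message key used for the text of each entry is an assumption, since only the role field appears in the error messages. The allowed roles follow the note above, although the error message itself also lists function.

```python
# Roles taken from the note above; the error message also lists "function".
ALLOWED_ROLES = {"user", "ai"}

def check_history(history):
    """Return a list of problems found in a conversation history value."""
    if not isinstance(history, list):
        return ["history must be an array (list)"]
    problems = []
    for index, entry in enumerate(history):
        role = entry.get("role") if isinstance(entry, dict) else None
        if role not in ALLOWED_ROLES:
            problems.append(
                f"history[{index}].role must be one of {sorted(ALLOWED_ROLES)}"
            )
    return problems

# The "message" key is an assumed name for the text of each entry.
good = [
    {"role": "user", "message": "Hi"},
    {"role": "ai", "message": "Hello, how can I help?"},
]
bad = [{"role": "assistant", "message": "Hello"}]

print(check_history(good))
print(check_history(bad))
print(check_history("just a string"))
```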

Error: Rate limit

429: {"message":"Rate limit reached for default-gpt-3.5-turbo-16k in organization org-JfRBhZhDGaEQPgWeVxj3OEGF on tokens per min. Limit: 180000 / min. Current: 1 / min. Contact us through our help center at help.openai.com if you continue to have issues.","type":"tokens","param":null,"code":"rate_limit_exceeded"}

This error happens when the API key in use has a different rate limit from the one Relevance uses by default. Retrying with pauses of varying length between attempts helps with this issue.

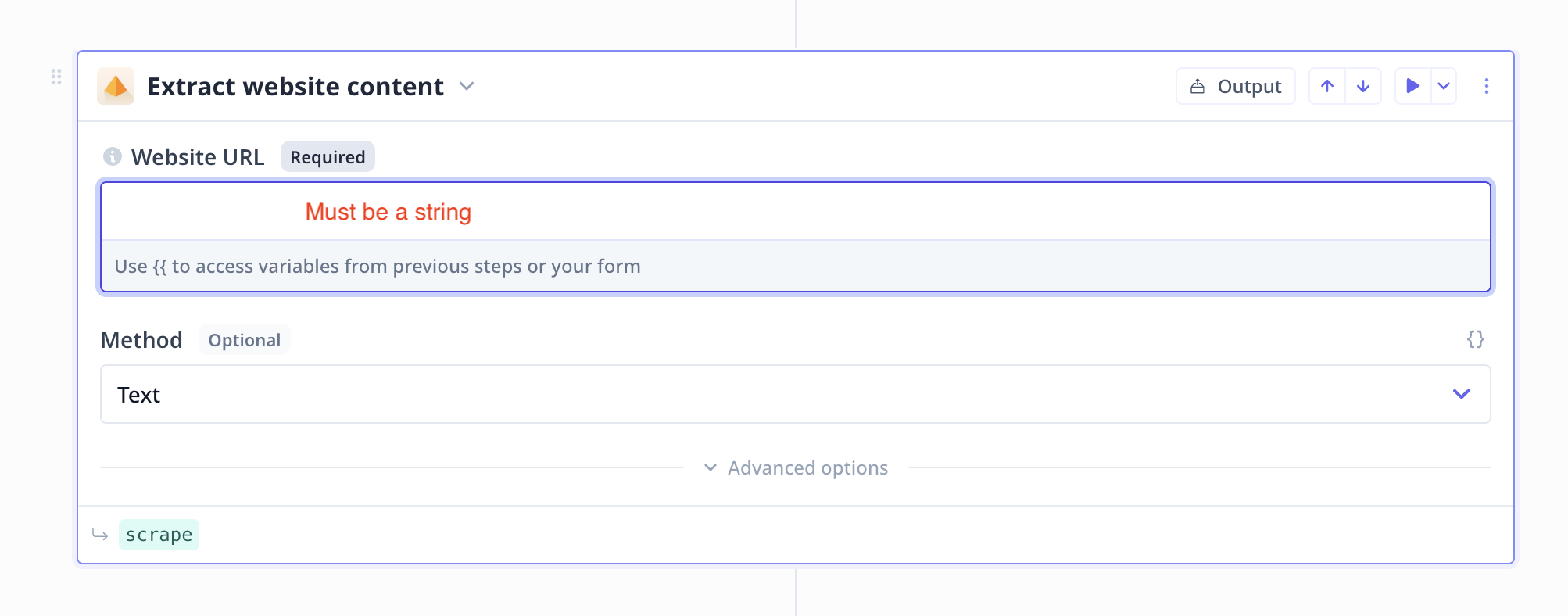
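If you call a Tool or an LLM from your own code, retrying with pauses can be sketched as follows. Everything here is illustrative: RateLimitError is a hypothetical stand-in for however your client reports a 429 response, and call_with_retries is not part of any Relevance or OpenAI library. The pauses grow exponentially with some random jitter, which is one common way to vary the interval.

```python
import random
import time

class RateLimitError(Exception):
    """Hypothetical stand-in for a 429 rate-limit response."""

def call_with_retries(call, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Run call(); on RateLimitError, pause and retry with growing delays."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the error to the caller.
            # Exponential backoff plus random jitter to vary the pause.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)

# Demo: a fake request that is rate limited twice before succeeding.
attempts = {"count": 0}

def flaky_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimitError("429: rate_limit_exceeded")
    return "ok"

pauses = []  # Record the pauses instead of actually sleeping in the demo.
result = call_with_retries(flaky_request, sleep=pauses.append)
print(result, "after", attempts["count"], "attempts")
```

Passing the sleep function as a parameter keeps the demo instant; in real use the default time.sleep applies the pauses.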

Error: URL issue

This error occurs when “Extract website content” receives an input that is not a string. The step expects a string value, such as a URL.

Studio transformation browserless_scrape input validation error: must be string {"type":"string"} /website_url

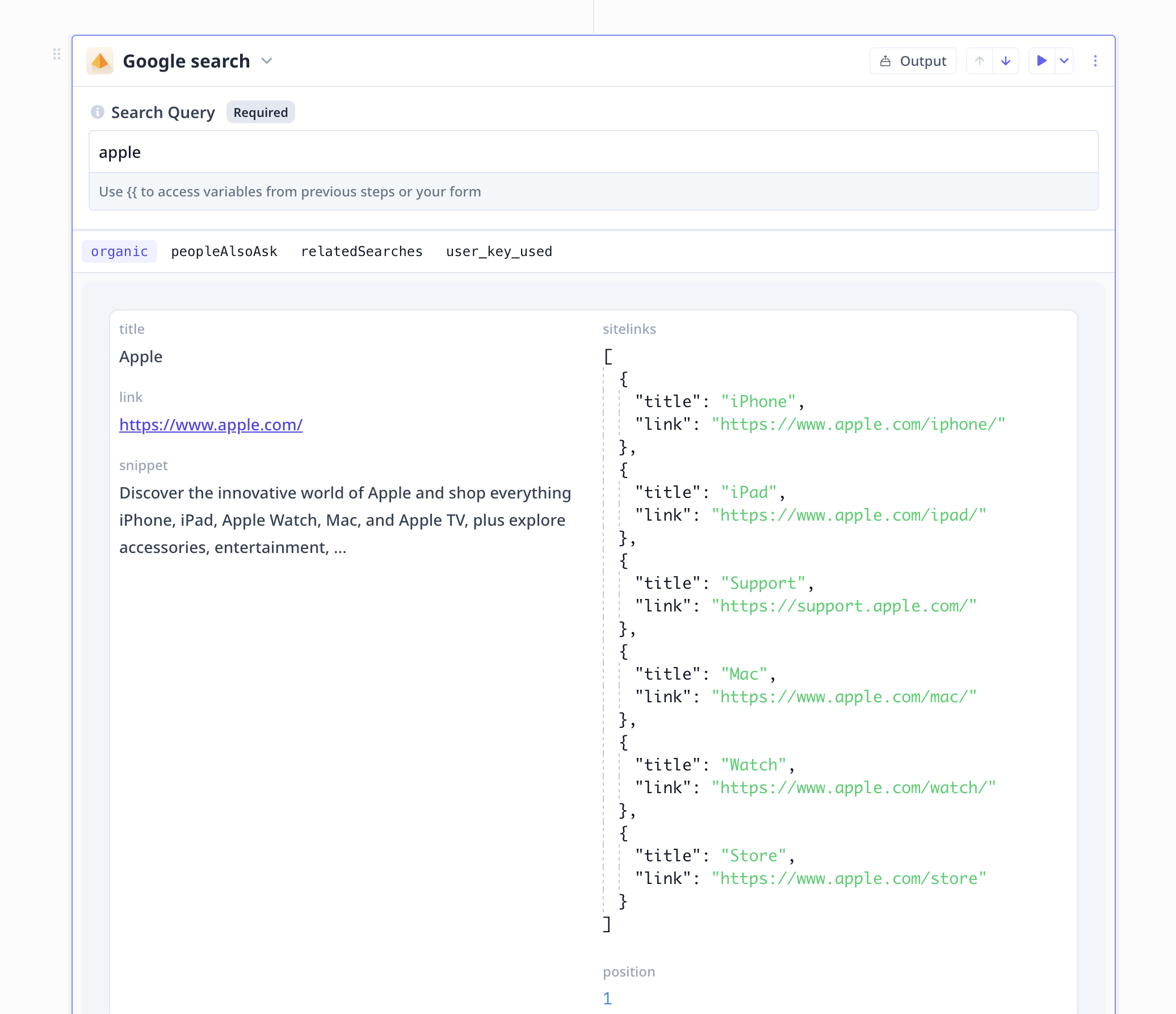

A common situation where this happens is when the output of another step (e.g. Google search as shown below) is used as the input to “Extract website content”. If the output is a list or an object similar to the example below, you need to access the URL field.

In our example, we can access the string URLs in three ways:

If the above result is the output of a Google search step, the first link is accessed via google.organic[0].link
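To show why the field access matters, here is a small Python sketch with a made-up search result. Only the organic[0].link path comes from the text above; the other keys and the URLs are invented for illustration, and a real Google search step may return more fields.

```python
# Hypothetical search output: a nested object, not a plain string.
google = {
    "organic": [
        {"title": "Example Domain", "link": "https://example.com/"},
        {"title": "Example Org", "link": "https://example.org/"},
    ]
}

# Passing `google` or `google["organic"]` would fail the string check.
# Drilling down to the link field yields the string the step expects.
first_link = google["organic"][0]["link"]
print(type(google["organic"]).__name__, "->", type(first_link).__name__)
print(first_link)
```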

Error: Validators

At Relevance, you can set up validators for your LLM component to ensure that the output follows the desired format. The error below happens when the LLM’s output does not meet the criteria. Prompt engineering is the best way to handle such a situation. Try rewording your prompt and providing examples.

Prompt completion did not pass validation

Error: Credits

The following error indicates that the credits are below zero and you need to top up to be able to continue using the platform.

Organization Entitlement setting negative_credits : {"limit":-10000} does not allow this action.
